Enterprise LLM Fine-Tuning Services

Transform a general-purpose AI model into a reliable, on-brand, policy-aligned enterprise assistant that improves accuracy, consistency, and productivity across teams.

Evaluation & Benchmark Validation

Hallucination Reduction Techniques

Domain-Specific Training Datasets

Continuous Model Re-Training Pipeline

Prompt + Fine-Tune Hybrid Optimization

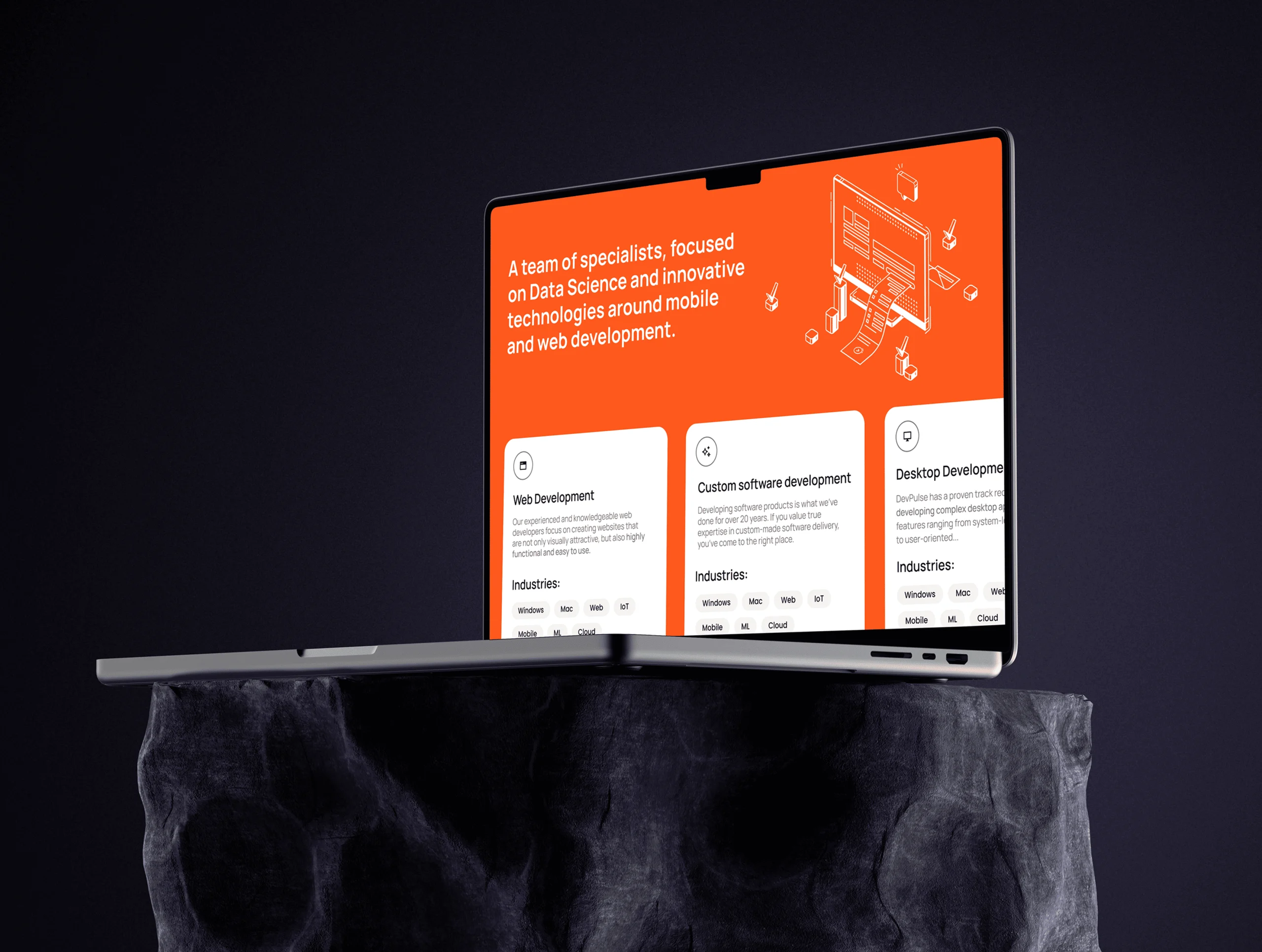

Enterprise LLM Fine-Tuning Services by devPulse

Organizations are under growing pressure to use AI in production workflows — yet generic models rarely match real business requirements. Teams need consistent tone, reliable decisions, and outputs aligned with internal standards, not answers that change every time.

Security and compliance teams require predictable behavior, product teams depend on repeatable formatting, and operations need AI that performs correctly without constant prompt adjustments.

devPulse adapts large language models to your domain, terminology, and operating rules. We fine-tune models to produce consistent, policy-aligned outputs — reducing review effort, operational friction, and risk while making AI dependable across everyday workflows.

The Business Problem

Enterprise AI must be predictable, compliant, and scalable — not just “impressive in a demo.”

In regulated workflows, the key challenge is enforcing a consistent format and specialist-level writing, which prompting and knowledge retrieval alone can’t reliably guarantee.

Generic models also have recurring domain blind spots that drive rework, delays, and risk. In latency-sensitive or restricted environments, relying on external lookups adds operational complexity. This is why enterprises fine-tune models to follow internal standards consistently at scale.

Outcomes You Can Expect

Tell us one workflow you want AI to perform reliably — we’ll define measurable improvements and the safest path to production.

Fine-tuning turns a general model into an operational system that follows your language, rules, and workflows consistently across the organization.

Domain Accuracy

Improve relevance to your terminology, products, and workflows while reducing incorrect or off-context responses.

Consistent Brand Voice

Standardize tone, phrasing, and positioning across teams, regions, and communication channels.

Operational Speed

Reduce time spent drafting, summarizing, responding, and triaging routine tasks.

Predictable Behavior

Align outputs with policies and minimize unexpected responses that introduce legal or compliance risk.

Organization-Wide Scaling

Replace individual prompt expertise with a dependable capability usable across roles and departments.

Lower Operational Cost

Reduce review effort and rework by producing usable first-pass outputs that require minimal human correction.

Start a fine-tuning assessment and understand where your current AI breaks in real workflows

When Fine-Tuning Is the Right Choice

A year ago, many teams believed fine-tuning was the default path to “make AI work for business.” Today, most enterprises start differently: they first try faster, cheaper ways to improve results—by refining instructions and connecting AI to internal knowledge. That approach is popular for a reason: it scales quickly, stays up-to-date as documents change, and is easier to iterate without long retraining cycles. But the story doesn’t end there.

Fine-tuning is still the right decision when the AI must behave like a specialist, not just “answer well.”

01

When format and style must be consistent every time

Think legal text that reads like it was written by a notary, or clinical reports that must follow an approved template. Adding facts can’t teach “how to write like your organization”—and instructions alone often break under real-world variability.

02

When the model has repeatable weak spots

If the same types of mistakes keep happening—terminology confusion, recurring misinterpretations—targeted adaptation can reduce review effort and operational rework, especially in high-volume processes.

03

When you need autonomy and predictable speed

In restricted or latency-sensitive environments, relying on extra runtime steps can be undesirable. A model that “already knows how to behave” is simpler to operate and more dependable.

The enterprise reality is that the strongest solutions are often hybrid: a model aligned to your standards, supported by up-to-date knowledge sources when needed.

Where Fine-Tuning Creates the Most Value

Practical AI use cases designed for regulated environments and embedded directly into your existing workflows — improving speed, consistency, and decision-making without compromising control or compliance.

Improve response quality and consistency, reduce escalations, and shorten resolution cycles.

Automate tagging, routing, risk detection, and structured decision tasks that require predictable outputs.

Support internal teams with policy-aligned guidance and drafting to reduce rework and review cycles.

Provide consistent answers and next-step guidance for internal processes across departments.

Create dependable first drafts for knowledge articles, internal updates, product messaging, and documentation.

Standardize outreach, proposals, follow-ups, and summaries aligned with your positioning.

Fine-Tuning vs Other Approaches

Combined approach: common in enterprise settings, pairing consistent model behavior with trusted knowledge sources.

How Delivery Works and What You Receive

Align goals and success metrics

We define what “better” means for your business: quality, speed, cost, and risk.

- Shared success criteria and a clear implementation direction

Baseline current performance

We measure existing AI behavior and workflow performance to create a before-and-after comparison.

- A benchmark report to prove real improvement

Adapt the model to your standards

We align outputs with your terminology, brand voice, and operational rules.

- A fine-tuned model that behaves according to your business requirements

Validate consistency and risk

We test edge cases and failure scenarios before broader usage.

- A rollout plan for teams and workflows, plus evidence of measurable performance gains

Long-term governance

We establish maintenance and improvement processes.

- Governance guidance and a sustainable performance model

Want your LLM to follow your rules, not improvise every response?

Why Teams Choose devPulse for Production AI

We focus on making AI dependable in real workflows — not just impressive in demos — by ensuring consistent behavior, measurable results, and systems teams can safely rely on every day.

Production-first engineering

We design AI systems for reliability from day one — monitoring, fallbacks, and safe failure modes included, not added later.

Measurable outcomes, not experiments

Every engagement starts with baseline metrics and ends with verified improvement in accuracy, cost, or review effort.

Architecture that stays flexible

Models, providers, and pipelines remain replaceable components, so your system evolves without costly rebuilds.

Adoption beyond the demo

We optimize for real usage: predictable behavior, usable outputs, and workflows teams actually trust and keep using.

FAQ

Still have a question?

"In many cases companies don’t need more AI — they need AI that behaves predictably. We usually start by understanding the workflow and risks first, and only then recommend fine-tuning if it truly improves reliability and reduces operational effort."

Anna Tukhtarova

CTO of devPulse